The machine learning approach to teaching a computer to recognize natural languages consists of training a model on a reference text for each language. The predicted language of a given text is the one whose model fits the text best. In practice, reliable language recognition with simple statistical models is already within reach.

The simple language model we will use here consists of a frequency table of all $$n$$-grams of a text file. An $$n$$-gram is just a series of $$n$$ successive characters on the same line. Before the $$n$$-grams of a line are determined, all uppercase letters must first be converted to lowercase, white space at the start and at the end of the line must be removed, and white space between words must be replaced by a single space. White space is defined as a series of consecutive spaces and tabs. Newlines are never part of an $$n$$-gram. Note that spaces and punctuation marks are part of the $$n$$-grams.
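The normalization and extraction steps for a single line can be sketched as follows. This is only an illustration under the rules stated above; the helper name `line_ngrams` is hypothetical and not part of the assignment.

```python
import re


def line_ngrams(line, n=2):
    """Return the n-grams of one line, in order of appearance (hypothetical helper)."""
    # drop the newline, convert to lowercase, remove leading/trailing spaces and tabs
    text = line.rstrip('\r\n').lower().strip(' \t')
    # replace every run of spaces and tabs between words by a single space
    text = re.sub(r'[ \t]+', ' ', text)
    # slide a window of width n over the normalized line
    return [text[i:i + n] for i in range(len(text) - n + 1)]


print(line_ngrams('  Een \t BANAAN. \n'))
# ['ee', 'en', 'n ', ' b', 'ba', 'an', 'na', 'aa', 'an', 'n.']
```

A line shorter than $$n$$ characters yields no $$n$$-grams, because the range in the list comprehension is then empty.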

To determine how closely the $$n$$-gram based language model of a text $$t$$ fits the $$n$$-gram based language model of a reference text $$r$$ (note that the same length $$n$$ must be used for the $$n$$-grams in both cases), the following score is calculated:

\[ s_r^n(t) = \sum_{m \in \mathcal{N}_t^n} f_t^n(m) \log\left(\frac{1 + f_r^n(m)}{c_r^n}\right) \]

Here $$\mathcal{N}_t^n$$ is the collection of all $$n$$-grams of the text $$t$$, $$f_t^n(m)$$ (resp. $$f_r^n(m)$$) is the number of times the $$n$$-gram $$m$$ occurs in the text $$t$$ (resp. $$r$$), and $$c_r^n$$ is the total number of $$n$$-grams in the reference text $$r$$. $$\log$$ is the natural logarithm. The higher the score, the better the language model of the text fits the language model of the reference text.
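As a worked illustration of the score, the sketch below evaluates the sum for two tiny bigram models. The helper names `score` and `bigrams` are hypothetical, and the two sample strings are an assumption: they were reconstructed from the frequency tables shown in the example section, not taken from the actual files.

```python
from collections import Counter
from math import log


def score(model_t, model_r):
    """Score of text model t against reference model r (hypothetical helper)."""
    total_r = sum(model_r.values())          # c_r: total number of n-grams in r
    return sum(
        count * log((1 + model_r.get(ngram, 0)) / total_r)
        for ngram, count in model_t.items()  # sum over the n-grams of t
    )


def bigrams(text):
    """Frequency table of the bigrams of an already normalized line."""
    return Counter(text[i:i + 2] for i in range(len(text) - 1))


model_nl = bigrams('een banaan.')   # assumed content of the Dutch sample
model_en = bigrams('a banana.')     # assumed content of the English sample
print(score(model_nl, model_en))    # about -16.11
```

Because of the $$1$$ in the numerator, an $$n$$-gram of $$t$$ that never occurs in $$r$$ contributes $$\log(1 / c_r^n)$$ instead of the undefined $$\log(0)$$.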

Assignment

- Write a function language_model that takes the location of a text file from which a simple language model can be trained. The function must return a dictionary that represents the frequency table of the language model as described in the introduction. The keys are the $$n$$-grams of the text, and the dictionary maps each $$n$$-gram onto its number of occurrences. The value $$n$$ can be passed to the function as an optional second argument (by default bigrams are counted: $$n=2$$).

- Write a function similarity that determines how closely the $$n$$-gram based language model of a text $$t$$ fits the $$n$$-gram based language model of a reference text $$r$$. Two arguments must be passed to this function: the dictionaries of the language models for the texts $$t$$ and $$r$$, in that order. The function must return the score as a floating point number.

- Write a function languageprocessing that predicts the language of a given text $$t$$. The first argument is a dictionary that represents the language model of the text $$t$$. The second argument is also a dictionary, which maps the description of a language (a string) onto the language model (a dictionary) that was determined from a reference text for that language. The function must return a tuple whose two elements are the description of the language (a string) and the score (a floating point number) of the language model in the second argument that best fits (highest score) the text $$t$$. The language with the highest score can be used as the prediction of the language of the given text $$t$$.
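One possible way to wire the three functions together is sketched below. It is a minimal sketch, not a reference solution. Reading the files as UTF-8 is an assumption, and so are the contents of the three sample files, which are written to a temporary directory here and were reconstructed from the frequency tables in the example section.

```python
import math
import os
import re
import tempfile
from collections import Counter


def language_model(path, n=2):
    """Frequency table of all n-grams in the text file at the given location."""
    counts = Counter()
    with open(path, encoding='utf-8') as handle:      # encoding is an assumption
        for line in handle:                           # n-grams never span lines
            text = line.rstrip('\r\n').lower().strip(' \t')
            text = re.sub(r'[ \t]+', ' ', text)
            counts.update(text[i:i + n] for i in range(len(text) - n + 1))
    return dict(counts)


def similarity(model_t, model_r):
    """Score of the model of text t against the model of reference text r."""
    total_r = sum(model_r.values())
    return sum(f * math.log((1 + model_r.get(m, 0)) / total_r)
               for m, f in model_t.items())


def languageprocessing(model_t, models):
    """Return (language, score) for the reference model with the highest score."""
    scores = {language: similarity(model_t, model)
              for language, model in models.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# assumed sample contents, written to temporary files for the demonstration
samples = {'nl': 'Een banaan.\n', 'en': 'A banana.\n', 'fr': 'Une banane.\n'}
trained = {}
with tempfile.TemporaryDirectory() as folder:
    for language, content in samples.items():
        path = os.path.join(folder, 'fruit.' + language)
        with open(path, 'w', encoding='utf-8') as handle:
            handle.write(content)
        trained[language] = language_model(path)

model_nl = trained.pop('nl')              # use the Dutch sample as the text t
result = languageprocessing(model_nl, trained)
print(result[0])                          # en
```

Computing every score once and storing it in a dictionary avoids evaluating `similarity` a second time for the winning language.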

Example

Click here to download a ZIP file that contains all files used in the examples below. The first example works with small files, so you can easily follow what happens.

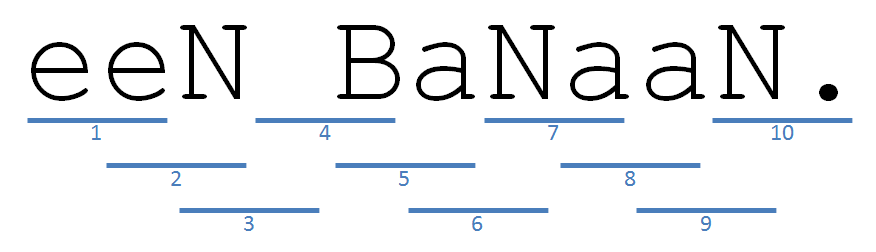

>>> language_model('fruit.nl')

{' b': 1, 'aa': 1, 'en': 1, 'ba': 1, 'n.': 1, 'ee': 1, 'na': 1, 'an': 2, 'n ': 1}

>>> language_model('fruit.en', 3)

{' ba': 1, 'ana': 2, 'a b': 1, 'na.': 1, 'nan': 1, 'ban': 1}

>>> language_model('fruit.fr', 4)

{'ne b': 1, 'une ': 1, 'e ba': 1, 'ane.': 1, 'nane': 1, 'bana': 1, 'anan': 1, ' ban': 1}

>>> model_nl = language_model('fruit.nl')

>>> model_en = language_model('fruit.en')

>>> model_fr = language_model('fruit.fr')

>>> similarity(model_nl, model_en)

-16.11228418967414

>>> similarity(model_nl, model_fr)

-18.7491848109244

>>> similarity(model_en, model_nl)

-13.450867444376364

>>> models = {'en': model_en, 'fr': model_fr}

>>> languageprocessing(model_nl, models)

('en', -16.11228418967414)

In the following example the language models are trained on the text of the Universal Declaration of Human Rights. According to the Guinness Book of Records, this is the most translated document. Here we see that the bigram "e•" (where • is a space) occurs 352 and 330 times in the Dutch and English translations of the text, respectively. The trigram that occurs most often in the English version is "the", namely 151 times. Despite the simplicity of the method, we find that the language of all text files is determined correctly.

>>> model_en = language_model('training.en', 3)

>>> [(trigram, number) for trigram, number in model_en.items() if number == max(model_en.values())][0]

('the', 151)

>>> model_en = language_model('training.en')

>>> model_nl = language_model('training.nl')

>>> model_fr = language_model('training.fr')

>>> model_en['e '], model_fr['e '], model_nl['e ']

(330, 509, 352)

>>> text_en = language_model('test.en')

>>> similarity(text_en, model_en)

-230.33674380150703

>>> similarity(text_en, model_fr)

-259.04854263587083

>>> similarity(text_en, model_nl)

-261.7988606708526

>>> models = {'en': model_en, 'fr': model_fr, 'nl': model_nl}

>>> languageprocessing(text_en, models)

('en', -230.33674380150703)

>>> text_fr = language_model('test.fr')

>>> languageprocessing(text_fr, models)

('fr', -129.8597104636806)

>>> text_nl = language_model('test.nl')

>>> languageprocessing(text_nl, models)

('nl', -185.44606662491037)