Questions

Consider a simple function \(R(\beta) = \sin(\beta) + \frac{\beta}{10}\).
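For reference, the derivative follows term by term. The update below is a sketch assuming plain fixed-step gradient descent with learning rate \(\rho\), which the tasks below appear to intend. The last line works out the first step from \(\beta^0 = 2.3\) with \(\rho = 0.1\).

```latex
\begin{gathered}
R'(\beta) = \cos(\beta) + \frac{1}{10} \\
\beta^{k+1} = \beta^{k} - \rho \, R'(\beta^{k}) = \beta^{k} - \rho \left( \cos(\beta^{k}) + \frac{1}{10} \right) \\
\beta^{1} = 2.3 - 0.1 \left( \cos(2.3) + 0.1 \right) \approx 2.3 - 0.1 \cdot (-0.5663) \approx 2.3566
\end{gathered}
```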

- Create an R function `cost` that returns the function value for some argument `beta`. (Hint: use the `sin()` function.)

- Draw a graph of this function over the range \(\beta \in [-6, 6]\). (not tested)

- What is the derivative of this function?

- Create an R function `cost_deriv` that returns the derivative for some argument `beta`. (Hint: \(\sin'(x) = \cos(x)\), i.e. use the `cos()` function.)

- Create an R function `gradient_descent` with arguments `num_iters`, `learning_rate`, and `init_value`. The function performs gradient descent for `num_iters` iterations, starting from the initial value `init_value` and using the learning rate `learning_rate`. It should return the vector of betas \(\beta^0, \beta^1, \dots\), i.e. the initial value followed by one value per iteration, so the vector has length `num_iters + 1`.

- Given \(\beta^0 = 2.3\), run gradient descent for 50 iterations with a learning rate of \(\rho = 0.1\) to find a local minimum of \(R(\beta)\). Store the vector in `beta_vec1`.

- Show each of \(\beta^0, \beta^1, \dots\) in your plot, as well as the answer. (not tested)

- Repeat with \(\beta^0 = 1.4\). This time, use 60 iterations and store the vector in `beta_vec2`.

- Show each of \(\beta^0, \beta^1, \dots\) in your plot, as well as the answer. (not tested)
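One possible solution sketch in base R for the tasks above. The names `cost`, `cost_deriv`, `gradient_descent`, `beta_vec1`, and `beta_vec2` come from the exercise; the update rule is assumed to be plain fixed-step gradient descent, and the plotting calls (`curve()`, `points()`) and their styling are an arbitrary choice, not something the exercise prescribes.

```r
# Objective R(beta) = sin(beta) + beta / 10 (works element-wise on vectors)
cost <- function(beta) {
  sin(beta) + beta / 10
}

# Derivative R'(beta) = cos(beta) + 1 / 10
cost_deriv <- function(beta) {
  cos(beta) + 1 / 10
}

# Assumed update: beta^{k+1} = beta^k - learning_rate * R'(beta^k).
# Returns beta^0, beta^1, ..., beta^num_iters (length num_iters + 1).
gradient_descent <- function(num_iters, learning_rate, init_value) {
  betas <- numeric(num_iters + 1)
  betas[1] <- init_value
  for (k in seq_len(num_iters)) {
    betas[k + 1] <- betas[k] - learning_rate * cost_deriv(betas[k])
  }
  betas
}

beta_vec1 <- gradient_descent(num_iters = 50, learning_rate = 0.1, init_value = 2.3)
beta_vec2 <- gradient_descent(num_iters = 60, learning_rate = 0.1, init_value = 1.4)

# Plot R on [-6, 6] and overlay the iterates of both runs.
# Colours and symbols are an arbitrary styling choice.
curve(cost, from = -6, to = 6, xlab = "beta", ylab = "R(beta)")
points(beta_vec1, cost(beta_vec1), col = "red", pch = 19)
points(beta_vec2, cost(beta_vec2), col = "blue", pch = 19)

# Mark the final iterate (the answer) of each run with a larger cross
points(tail(beta_vec1, 1), cost(tail(beta_vec1, 1)), col = "red", pch = 4, cex = 2)
points(tail(beta_vec2, 1), cost(tail(beta_vec2, 1)), col = "blue", pch = 4, cex = 2)
```

The last elements, `tail(beta_vec1, 1)` and `tail(beta_vec2, 1)`, are the approximate local minima reached by each run; comparing `cost()` at those two points shows which run ends lower.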

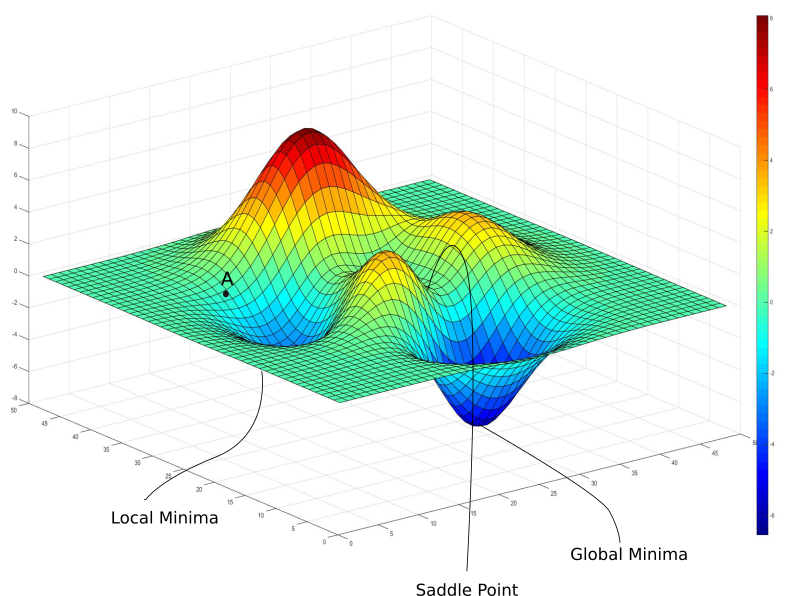

- MC1: Which of the two initializations ends up at the global optimum, i.e. the lowest value of \(R(\beta)\) over the plotted range \(\beta \in [-6, 6]\)?

- 1) \(\beta^0 = 2.3\)

- 2) \(\beta^0 = 1.4\)
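One way to reason about MC1 analytically, sketched here as a supplement to the plot: set \(R'(\beta) = \cos(\beta) + \frac{1}{10}\) to zero, classify the stationary points in \([-6, 6]\) with the second derivative, compare the values of \(R\), and check the sign of \(R'\) at each starting point (a negative derivative means the first step moves \(\beta\) to the right, a positive one to the left).

```latex
\begin{gathered}
R'(\beta) = 0 \iff \cos(\beta) = -\tfrac{1}{10} \iff \beta \approx \pm 1.671 \ \text{or} \ \beta \approx \pm 4.612 \quad (\beta \in [-6, 6]) \\
R''(\beta) = -\sin(\beta) > 0 \ \text{at} \ \beta \approx -1.671 \ \text{and} \ \beta \approx 4.612 \quad \text{(local minima)} \\
R(-1.671) \approx -1.162, \qquad R(4.612) \approx -0.534, \qquad R(\pm 6) \approx \pm 0.321 \\
R'(2.3) \approx -0.566 < 0, \qquad R'(1.4) \approx 0.270 > 0
\end{gathered}
```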