Web Scraping with Rvest

In this exercise, we will learn how to scrape data from a webpage using the rvest package in R.

Web scraping is a method used to extract data from websites.

This can be done manually by a user or automatically by a program written in almost any programming language.

Setting Up Your Environment

Before we start, it’s a good idea to clear your working environment.

This can be done using the rm(list=ls()) command in R.

Next, we need to install and load the rvest package.

This package provides a set of functions that make it easy to scrape (or harvest) data from HTML web pages.

if (!require('pacman')) install.packages('pacman'); require('pacman',character.only=TRUE,quietly=TRUE)

p_load(rvest)

Defining the URL

The first step in web scraping is to define the URL of the webpage that you want to scrape. In this exercise, we will scrape the www.scrapethissite.com website.

url <- "https://www.scrapethissite.com/pages/simple/"

Downloading and Parsing the HTML File

After defining the URL, we can use the read_html() function to download and parse the HTML file of the webpage.

html <- url %>% read_html()
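To see what read_html() returns without depending on a network connection, the following minimal sketch parses a small HTML string instead of a URL. The inline markup is a made-up stand-in for the real page, not its actual source.

```r
library(rvest)

# Hypothetical stand-in for the downloaded page (not the site's real markup)
snippet <- "<html><body><h3 class='country-name'>Andorra</h3></body></html>"

# read_html() also accepts literal HTML, so no download is needed here
page <- read_html(snippet)

# The parsed result is an xml_document, the same kind of object
# that read_html(url) returns
class(page)
```

Working on a small string like this can be a convenient way to try out selectors before running them against the live page.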

To see the structure of the HTML file, you can use the html_structure() function from the xml2 package, which is installed as a dependency of rvest.
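As a rough illustration, the sketch below prints the structure of a tiny hand-written document. It assumes xml2 is available (it is a dependency of rvest); the markup and the country-population class are invented for the example.

```r
library(rvest)
library(xml2)  # html_structure() is provided by xml2

# Hypothetical one-country page used only for illustration
page <- read_html(paste0(
  "<html><body>",
  "<div class='country'>",
  "<h3 class='country-name'>Andorra</h3>",
  "<span class='country-population'>84000</span>",
  "</div>",
  "</body></html>"
))

# Prints an indented outline of the document's tags, without their text values
html_structure(page)
```

On a real page the outline can be long, so it is usually most helpful for getting a first overview of how the elements are nested.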

Extracting Data

Next, we will extract all 250 country names on the webpage. For this, we will use the Chrome extension SelectorGadget to determine the relevant CSS selector.

After you have added the extension to your browser, go to the website and click on the SelectorGadget icon in the browser.

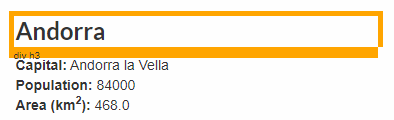

Your mouse cursor will now select the elements on the webpage.

Click on one of the country names. This will highlight all the country names on the webpage, since they all share the same CSS selector.

The CSS selector can be found at the bottom right of the browser window.

This selector is then passed to html_nodes(), which finds all nodes that match the selector.

nodes <- html %>% html_nodes('.country-name')

length(nodes)

This will return the number of nodes (in this case, number of country names) in the HTML file that match the selector.
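The same selection can be tried offline on a small example. The sketch below uses a hypothetical two-country page in which only the country-name class mirrors the selector used above; everything else is invented.

```r
library(rvest)

# Hypothetical two-country page; not the site's actual markup
page <- read_html(paste0(
  "<html><body>",
  "<div class='country'><h3 class='country-name'>Andorra</h3>",
  "<span class='country-population'>84000</span></div>",
  "<div class='country'><h3 class='country-name'>Angola</h3>",
  "<span class='country-population'>13068161</span></div>",
  "</body></html>"
))

# '.country-name' matches every element that has the class country-name
nodes <- page %>% html_nodes(".country-name")

length(nodes)  # 2, one node per country in this small page
```

In rvest 1.0.0 and later, html_elements() is the preferred name for this operation; html_nodes() still works.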

Finally, use html_text() to extract the country names.

country_names <- nodes %>% html_text()
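It can help to look at what html_text() returns before any cleaning. In the sketch below, the indentation inside the tag is an assumption about how such pages are typically formatted, not a copy of the real source.

```r
library(rvest)

# Hypothetical markup with the kind of indentation often found in real pages
page <- read_html(
  "<html><body><h3 class='country-name'>\n    Andorra\n  </h3></body></html>"
)

raw_name <- page %>% html_nodes(".country-name") %>% html_text()

# print() shows the newlines as \n, which makes the extra whitespace visible
print(raw_name)

# The string is longer than the 7 characters of "Andorra"
nchar(raw_name)
```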

The country_names object now contains all 250 country names on the webpage, but each string contains leading and trailing whitespace, including newlines.

We can use the str_trim() function to remove this.

p_load(stringr)

(country_names <- country_names %>% str_trim())

[1] "Andorra" "United Arab Emirates"

[3] "Afghanistan" "Antigua and Barbuda"

[5] "Anguilla" "Albania"

[7] "Armenia" "Angola"

[9] "Antarctica" "Argentina"

[11] "American Samoa" "Austria"

[13] "Australia" "Aruba"

[15] "Åland" "Azerbaijan"

...
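str_trim() is not the only way to strip the whitespace. The sketch below compares it with two alternatives, base R's trimws() and the trim argument of html_text(), on a hypothetical one-element page.

```r
library(rvest)
library(stringr)

# Hypothetical markup with surrounding whitespace inside the tag
page <- read_html(
  "<html><body><h3 class='country-name'>\n    Andorra\n  </h3></body></html>"
)
nodes <- page %>% html_nodes(".country-name")

with_stringr <- nodes %>% html_text() %>% str_trim()  # approach used above
with_base    <- trimws(html_text(nodes))               # base R, no extra package
with_rvest   <- html_text(nodes, trim = TRUE)          # trim while extracting

# All three give the same cleaned string
c(with_stringr, with_base, with_rvest)
```

Which variant to use is largely a matter of taste; trimming inside html_text() avoids loading stringr when no other string functions are needed.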

Exercise

Scrape all the country capitals and store them in capitals.

Make sure you remove leading and trailing whitespace.

Assume that:

- The website is the one used above: https://www.scrapethissite.com/pages/simple/.

- The rvest package has been loaded.