The ROCR package can be used to produce ROC curves.

We first write a short function to plot an ROC curve given a vector containing a numerical score for each observation, pred, and a vector containing the class label for each observation, truth.

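```r
library(ROCR)

# Plot an ROC curve from numerical scores (pred) and true class labels (truth).
# Extra arguments (e.g. main, add, col) are passed on to plot().
rocplot <- function(pred, truth, ...) {
  predob <- prediction(pred, truth)          # pair each score with its label
  perf <- performance(predob, "tpr", "fpr")  # true positive rate vs. false positive rate
  plot(perf, ...)
}
```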
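To make concrete what rocplot() draws, here is a minimal base-R sketch that computes the same kind of (false positive rate, true positive rate) pairs by hand. It sweeps a threshold down through the scores and calls every observation whose score is at or above the threshold positive. The helper roc_points() and the toy scores are hypothetical and for illustration only. As with ROCR's default for character labels, the sketch assumes that higher scores favor the class that sorts last (here "b").

```r
# Hypothetical helper: (FPR, TPR) at each threshold, treating score >= threshold as positive
roc_points <- function(pred, truth, positive) {
  is_pos <- truth == positive
  # Thresholds: Inf (nothing called positive), then every distinct score from high to low
  thresholds <- c(Inf, sort(unique(pred), decreasing = TRUE))
  tpr <- sapply(thresholds, function(t) sum(pred >= t & is_pos) / sum(is_pos))
  fpr <- sapply(thresholds, function(t) sum(pred >= t & !is_pos) / sum(!is_pos))
  data.frame(threshold = thresholds, fpr = fpr, tpr = tpr)
}

# Made-up scores and labels
pred  <- c(0.9, 0.8, 0.4, 0.3, 0.1)
truth <- c("b", "b", "a", "b", "a")
pts <- roc_points(pred, truth, positive = "b")
print(pts)

# Connect the points to draw the ROC curve; the dashed diagonal marks random guessing
plot(pts$fpr, pts$tpr, type = "l", xlab = "False positive rate", ylab = "True positive rate")
abline(0, 1, lty = 2)
```

Each lowered threshold moves the curve up (a true positive is gained) or right (a false positive is gained). This is why a good scorer's curve hugs the top-left corner.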

SVMs and support vector classifiers output class labels for each observation. However, it is also possible to obtain fitted values for each observation, which are the numerical scores used to obtain the class labels. For instance, in the case of a support vector classifier, the fitted value for an observation \(X = (X_1, X_2, \ldots, X_p)^T\) takes the form \(\hat\beta_0 + \hat\beta_1X_1 + \hat\beta_2X_2 + \cdots + \hat\beta_pX_p\).
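As a quick numerical illustration of this linear form, the snippet below evaluates \(\hat\beta_0 + \hat\beta_1X_1 + \cdots + \hat\beta_pX_p\) for \(p = 3\). The coefficients and observations are made up for illustration and do not come from any fitted model.

```r
# Hypothetical estimated coefficients for p = 3 (made up, not from a real fit)
beta0 <- -0.5
beta  <- c(1.2, -0.7, 0.3)

# Two hypothetical observations, one per row: X = (X1, X2, X3)
X <- rbind(c(0.4, 1.0, 2.0),
           c(1.0, 0.0, 0.0))

# Fitted value for each row: beta0 + beta1*X1 + beta2*X2 + beta3*X3
fitted_linear <- beta0 + drop(X %*% beta)
fitted_linear
```

Here the first observation gets a negative fitted value (-0.12) and the second a positive one (0.7). The two observations therefore fall on opposite sides of the hyperplane.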

For an SVM with a non-linear kernel, the fitted value is given by \(f(x)=\beta_0+\sum_{i\in \mathcal{S}}\alpha_iK(x,x_i)\), where \(\mathcal{S}\) is the set of indices of the support vectors. In essence, the sign of the fitted value determines on which side of the decision boundary the observation lies. Therefore, the relationship between the fitted value and the class prediction for a given observation is simple: if the fitted value exceeds zero then the observation is assigned to one class, and if it is less than zero then it is assigned to the other. In order to obtain the fitted values for a given SVM model fit, we use decision.values=TRUE when fitting svm(). Then, calling predict() with decision.values=TRUE attaches the fitted values to its output as the decision.values attribute.
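The non-linear fitted value and the sign rule can be sketched together in base R. The code assumes the radial kernel in the parameterization used by e1071's svm(), \(K(x, x_i) = \exp(-\gamma \lVert x - x_i \rVert^2)\). The support vectors, \(\alpha_i\) values and \(\beta_0\) are hypothetical numbers, and the class names are placeholders. This is an illustration of the formula, not how svm() stores or evaluates a fitted model internally.

```r
# Radial kernel, assuming e1071's parameterization: K(x, xi) = exp(-gamma * ||x - xi||^2)
radial_kernel <- function(x, xi, gamma) exp(-gamma * sum((x - xi)^2))

# f(x) = beta0 + sum over support vectors of alpha_i * K(x, x_i)
svm_fitted <- function(x, sv, alpha, beta0, gamma) {
  k <- apply(sv, 1, function(xi) radial_kernel(x, xi, gamma))
  beta0 + sum(alpha * k)
}

# Hypothetical support vectors (one per row), coefficients and intercept
sv    <- rbind(c(0, 0), c(1, 1), c(2, 0))
alpha <- c(0.8, -1.0, 0.5)
beta0 <- 0.1

# Fitted values for three new points (each row of new_x is passed as x)
new_x  <- rbind(c(0, 0), c(1, 1), c(3, 3))
fitted <- apply(new_x, 1, svm_fitted, sv = sv, alpha = alpha, beta0 = beta0, gamma = 2)

# Sign rule: positive fitted value -> one class, negative -> the other (placeholder names)
pred_class <- ifelse(fitted > 0, "class 1", "class 2")
data.frame(fitted = round(fitted, 4), pred_class)
```

The third point is far from every support vector, so all kernel terms are essentially zero and its fitted value falls back to roughly \(\beta_0\).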

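```r
library(e1071)  # provides svm()
svmfit.opt <- svm(y ~ ., data = dat[train, ], kernel = "radial", gamma = 2, cost = 1, decision.values = TRUE)
# predict() returns class labels; the fitted values come attached as the "decision.values" attribute
fitted <- attributes(predict(svmfit.opt, dat[train, ], decision.values = TRUE))$decision.values
```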
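The attributes(...)$decision.values idiom works because predict() returns the predicted class labels with the decision values attached as an attribute. The toy object below imitates that shape without fitting a model. The labels and numbers are hypothetical, and the "1/2" column name only assumes the e1071 documentation's convention that column names indicate the two classes of each binary classifier. The attr() function reads the same attribute more directly.

```r
# Hypothetical stand-in for predict(..., decision.values = TRUE) output:
# a factor of predicted labels carrying a decision.values matrix as an attribute
pred <- factor(c("1", "2", "1"), levels = c("1", "2"))
attr(pred, "decision.values") <- matrix(c(0.8, -1.3, 0.2), ncol = 1,
                                        dimnames = list(NULL, "1/2"))

# Two equivalent ways to pull the fitted values back out
dv1 <- attributes(pred)$decision.values
dv2 <- attr(pred, "decision.values")
identical(dv1, dv2)
```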

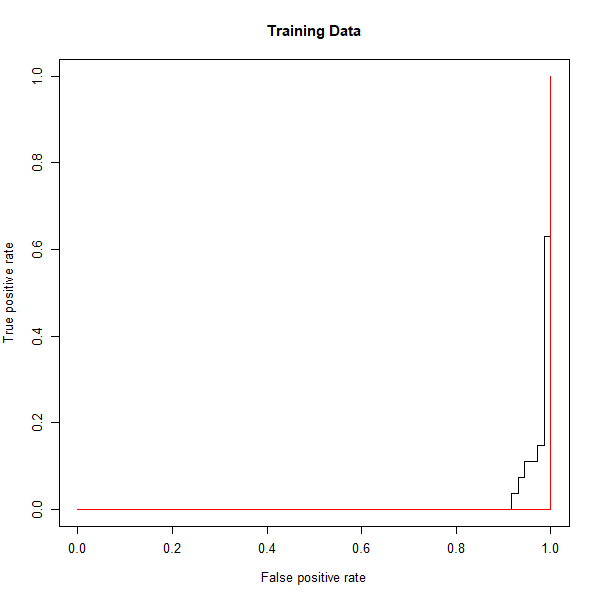

Now we can produce the ROC plot.

```r
rocplot(fitted, dat[train, "y"], main = "Training Data")
```
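An ROC curve is often summarized by the area under it (AUC). ROCR can report this directly via performance(predob, "auc"), with the value stored in the y.values slot. As a dependency-free sketch, the same quantity can also be computed from ranks. The AUC equals the probability that a randomly chosen positive observation scores higher than a randomly chosen negative one, with ties counting one half. The helper auc_rank() and the toy data are hypothetical.

```r
# Hypothetical helper: AUC via the Mann-Whitney rank statistic
auc_rank <- function(pred, truth, positive) {
  is_pos <- truth == positive
  n_pos <- sum(is_pos)
  n_neg <- sum(!is_pos)
  r <- rank(pred)  # average ranks, so tied scores count one half
  (sum(r[is_pos]) - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)
}

# Made-up scores and labels; "b" is treated as the positive class
pred  <- c(0.9, 0.8, 0.4, 0.3, 0.1)
truth <- c("b", "b", "a", "b", "a")
auc_rank(pred, truth, positive = "b")
```

For these toy scores, five of the six positive/negative pairs are ordered correctly, giving an AUC of 5/6. An AUC of 1 means perfect separation, and 0.5 is no better than random guessing.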

The SVM appears to be producing accurate predictions. By increasing \(\gamma\) we can produce a more flexible fit and generate further improvements in accuracy on the training data.
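Why does a larger \(\gamma\) give a more flexible fit? With the radial kernel \(K(x, x_i) = \exp(-\gamma \lVert x - x_i \rVert^2)\) (assumed here, as in e1071's parameterization), \(\gamma\) controls how quickly a support vector's influence fades with distance. The snippet compares the kernel at a few illustrative squared distances for the two values of \(\gamma\) used in this lab.

```r
# Illustrative squared distances ||x - x_i||^2 between a point and a support vector
d2 <- c(0.01, 0.1, 0.5, 1)

# Radial kernel values for the two gamma settings used in this lab
k_gamma2  <- exp(-2 * d2)
k_gamma50 <- exp(-50 * d2)

round(rbind(gamma_2 = k_gamma2, gamma_50 = k_gamma50), 4)
```

With \(\gamma = 50\), a support vector at squared distance 0.1 contributes well under 1% of its full weight, versus about 82% with \(\gamma = 2\). Each support vector therefore shapes the boundary only in a small neighborhood, letting the boundary bend around individual training points.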

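```r
# A more flexible fit: the same SVM with gamma increased from 2 to 50
svmfit.flex <- svm(y ~ ., data = dat[train, ], kernel = "radial", gamma = 50, cost = 1, decision.values = TRUE)
fitted <- attributes(predict(svmfit.flex, dat[train, ], decision.values = TRUE))$decision.values
rocplot(fitted, dat[train, "y"], add = TRUE, col = "red")  # overlay on the existing plot in red
```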

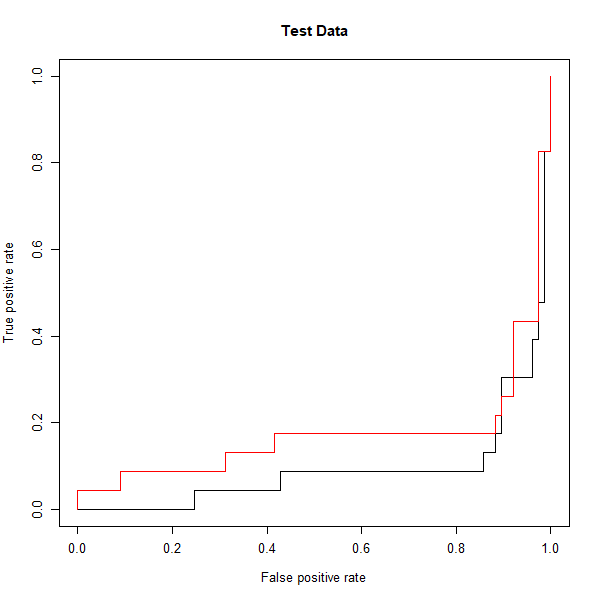

However, these ROC curves are all computed on the training data. We are really more interested in the level of prediction accuracy on the test data. When we compute the ROC curves on the test data, the model with \(\gamma = 2\) appears to provide the most accurate results.

```r
# Test-set ROC curve for gamma = 2
fitted <- attributes(predict(svmfit.opt, dat[-train, ], decision.values = TRUE))$decision.values
rocplot(fitted, dat[-train, "y"], main = "Test Data")
# Overlay the test-set ROC curve for gamma = 50 in red
fitted <- attributes(predict(svmfit.flex, dat[-train, ], decision.values = TRUE))$decision.values
rocplot(fitted, dat[-train, "y"], add = TRUE, col = "red")
```