Extracting tweets

First, it is required to install and load packages to retrieve network data from Twitter.

if (!require("pacman")) install.packages("pacman") ; require("pacman")

p_load(rtweet, httr,tidyverse)

We have extracted 100 tweets about Samsung1.

load("samsung.Rdata")

tweets_data(tweets)

# A tibble: 100 x 68

user_id status_id created_at screen_name text source display_text_wi~

<chr> <chr> <dttm> <chr> <chr> <chr> <dbl>

1 1187925999389986816 16225356~ 2023-02-06 10:00:11 kanadianbe~ "C$6~ Shari~ 250

2 552548889 16225355~ 2023-02-06 10:00:01 koreatimes~ "Why~ Twitt~ 122

3 842682781541060608 16225355~ 2023-02-06 10:00:00 SamsungNew~ "Wit~ Twitt~ 228

4 842682781541060608 16225091~ 2023-02-06 08:15:00 SamsungNew~ "Sam~ Twitt~ 272

5 1409816184657047558 16225350~ 2023-02-06 09:57:50 BenJino14 "iPh~ Twitt~ 140

6 363578454 16225349~ 2023-02-06 09:57:36 ParagJadha~ "Wit~ Twitt~ 139

7 363578454 16225350~ 2023-02-06 09:57:44 ParagJadha~ "Own~ Twitt~ 144

8 2766218559 16225324~ 2023-02-06 09:47:35 leo_mukher~ "The~ Twitt~ 140

9 117067677 16225315~ 2023-02-06 09:44:07 Hulkamania~ "65”~ Twitt~ 140

10 1412737071396102147 16225314~ 2023-02-06 09:43:32 ilee81 "Cra~ Twitt~ 67

# ... with 90 more rows, and 59 more variables: reply_to_screen_name <chr>, is_quote <lgl>, is_retweet <lgl>,

# favorite_count <int>, retweet_count <int>, hashtags <list>, symbols <list>, urls_url <list>, urls_t.co <list>,

# urls_expanded_url <list>, media_url <list>, media_t.co <list>, media_expanded_url <list>, media_type <list>,

# ext_media_url <list>, ext_media_t.co <list>, ext_media_expanded_url <list>, ext_media_type <chr>, mentions_user_id <list>,

# mentions_screen_name <list>, lang <chr>, quoted_status_id <chr>, quoted_text <chr>, quoted_created_at <dttm>,

# quoted_source <chr>, quoted_favorite_count <int>, quoted_retweet_count <int>, quoted_user_id <chr>,

# quoted_screen_name <chr>, quoted_name <chr>, quoted_followers_count <int>, quoted_friends_count <int>, ...

Since we are interested in what the content is of the tweets, we extract the tweet texts.

text <- tweets_data(tweets) %>% pull(text)

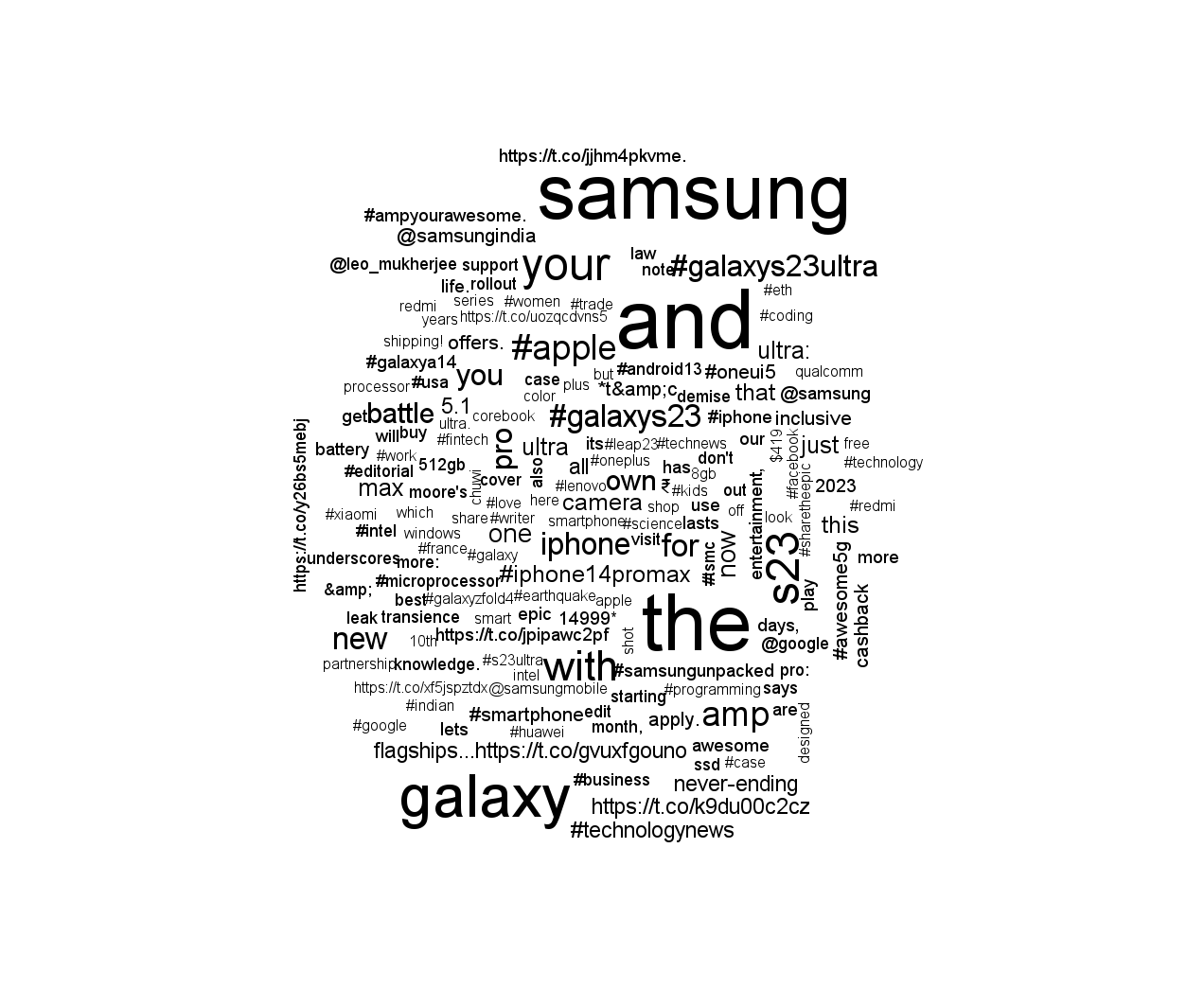

Creating word clouds

Load the following packages to create a word cloud. Wordcloud is the default package. Wordcloud2 is a fancier, updated version of wordcloud, although it has some bugs.

p_load(tm,wordcloud, wordcloud2)

We create a term-by-document matrix and count the word occurrences.

tdm <- TermDocumentMatrix(Corpus(VectorSource(text)))

m <- as.matrix(tdm)

v <- sort(rowSums(m),decreasing=TRUE)

d <- tibble(word = names(v),freq=v) #or: data.frame(word = names(v),freq=v)

We remove search string from results and order.

search.string <- "#samsung"

d <- d %>% filter(word != tolower(search.string)) %>% arrange(desc(freq))

Next, we can create a word cloud.

options(warn=-1) #turn warnings off

wordcloud(d$word,d$freq)

options(warn=0) #turn warnings back on

We can create the same word cloud, using the Wordcloud2 package. However, this will not work due to dirty text. Therefore, text preprocessing is necessary.

wordcloud2(d)

Exercise

Create a word cloud of the apple dataset and store it as wordcloud_apple.

Note: Copy your code in RStudio to see the output word cloud.

To download the apple dataset click

here2.

Assume that:

- The

appledataset is given. - The tm, rtweet, and wordcloud packages are loaded.